Is There Bias in AI and ML?

The tremendous influence of artificial intelligence (AI) and machine learning (ML) is undeniable. These technologies are no longer just a form of innovation; they have become part of the very fabric of our changing society. AI and ML have embedded themselves in our professional, personal, and even philosophical spheres of existence, significantly changing the contours of decisions, policies, and human experiences.

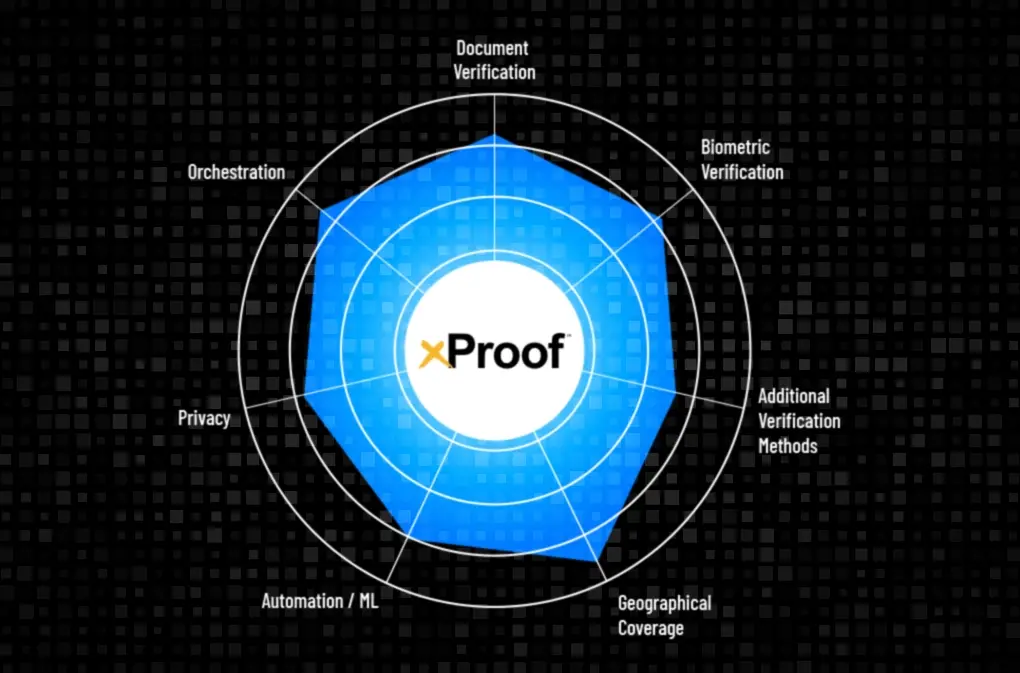

As we stand on the verge of this technological revolution, we’re witnessing the reach of AI and ML extend across a wide range of industries. Whether it’s the sophisticated world of predictive analytics, which uses historical data to forecast potential scenarios and help drive strategic decisions, or the advanced domain of biometrics, which supports secure and rapid identity verification, these technologies’ footprints are everywhere.

But as we marvel at these advancements and the opportunities they promise, it’s imperative for SMEs, specialists, developers, and end users alike to pause and reflect on a critical consideration. Beyond the algorithms, code, and datasets lies a question that challenges the ethical and functional foundation of these technologies: Is there an inherent bias in AI and ML? This question doesn’t merely call for a yes-or-no answer; it calls for a close look at the nuances, implications, and potential remedies for what could be one of the most defining issues in the AI and ML landscape.

Bias in AI and ML: the underlying issue

In the ever-evolving discourse surrounding AI and ML, the issue of bias isn’t just a theoretical possibility or an abstract speculation—it’s a verifiable and well-documented challenge. At the heart of this issue is the profound realization that AI and ML, despite their foundations in complex algorithms and mathematical precision, don’t spontaneously emerge in an isolated, untainted environment. Rather, their genesis and evolution are deeply rooted in the vast datasets harvested from a myriad of human activities and decisions. The caveat here—and one that we must approach with both caution and cognizance—is that these datasets can be, and often are, mirrors reflecting historical, societal, and systemic biases that have persisted over time.

Take, for example, the high-stakes domain of biometrics, a field where the slightest error can have wide-reaching ramifications. Facial recognition, a subset of biometrics, has been thrust into the limelight by its growing applications in security, identity verification, authentication, and more. Empirical studies and real-world deployments have repeatedly shown that these technologies, despite their cutting-edge design, exhibit a worrying trend: they disproportionately misidentify people of color. Such disparities aren’t mere statistical anomalies; they’re indicative of a deeper, more systemic issue. When flawed or skewed algorithms are used by law enforcement or other authoritative institutions, the implications aren’t just technical; they’re profoundly societal. The risks run deep, from wrongful accusations to the erosion of trust in these tools and the organizations that wield them. The stakes are therefore high, compelling us to inspect and address the root of these biases, lest we inadvertently amplify them in our quest for technological advancement.

The root causes are more than just data

On the surface, it may appear straightforward to trace the origins of bias in AI and ML solely to imperfect or skewed datasets. A simplistic understanding might lead one to believe that ‘cleaning up’ these datasets is the panacea for all issues related to bias. However, a deeper exploration, supported by research from institutions like the National Institute of Standards and Technology (NIST), reveals a tapestry of causative factors, each intertwined with and often compounding the others.

Bias in AI and ML isn’t a single problem with a single source; it’s a multifaceted challenge. At its core, the issue doesn’t simply stem from numerical discrepancies or errant data points. Rather, it emerges from a confluence of technical, human, and systemic elements. The overt biases (those that are visible, quantifiable, and often headline-worthy because they appear in data or algorithmic design) capture most of our attention, yet they are merely the proverbial ‘tip of the iceberg.’

Hidden beneath this visible surface is a labyrinthine world of deeply entrenched human biases, often birthed from individual prejudices, beliefs, or misperceptions. These insidious biases can seep into the decision-making processes, design protocols, and, ultimately, the AI and ML systems. But beyond the individual lies an even larger behemoth: systemic biases. These are biases that have taken root within institutional practices, often fortified over time and reflective of larger societal structures and inequalities. They can escape the lens of cursory examinations, and yet their impact can be the most profound and far-reaching.

Thus, as we navigate the challenges of bias in AI and ML, it is paramount for us—industry leaders, researchers, and stakeholders—to expand our understanding beyond just the evident and delve deeper into the multi-layered nuances that contribute to these biases. Only with such a comprehensive and holistic perspective can we hope to truly address and mitigate their impacts.

Navigating digital identity through NIST SP 800-63-4 guidelines with an emphasis on bias concerns

The NIST SP 800-63-4 guidelines focus on digital identity, encompassing identity verification, authentication, and federation. In the age of digital transformation, the integrity of digital identity has taken center stage, ensuring seamless yet secure transactions and customer interactions in the virtual realm. It’s become paramount to construct robust guidelines that focus not only on the mechanics of identity validation but also on the intrinsic fairness of such processes. NIST’s guidelines provide a comprehensive framework that addresses digital identity practices while proactively identifying and addressing concerns related to bias in these systems. They can be distilled into the following main points.

Identity verification

- Risk of Misidentification: One of the concerns is the impact of providing a service to the wrong individual, such as a fraudster successfully impersonating someone else.

- Barriers to Access: Another concern is not providing service to an eligible user, due to various barriers, including biases, that a user might face during the identity verification process.

- Excessive Data Collection: The guidelines also highlight concerns about collecting and retaining excessive amounts of information to support identity verification, which could lead to biases if data collection isn’t equitably distributed across populations.

Authentication

- Erroneous Authentication: The impact of authenticating the wrong user is a concern, particularly if an attacker compromises or steals an authenticator.

- Failure to Authenticate Legitimate Users: Just as with identity verification, there’s a worry about barriers, including biases, that prevent the correct user from being authenticated when presenting their authenticator.

Federation

- Unauthorized Access: The guidelines express concerns about the wrong user successfully accessing an application, system, or data, such as by compromising or replaying an assertion.

- Data Misdirection: There’s a risk associated with releasing user attributes to the incorrect application or system.

Holistic risk evaluation

- The guidelines stress the importance of understanding the potential impacts of failure during identity verification, authentication, and federation. Entities need to consider the ripple effects of harm, which may disproportionately affect certain groups and thereby introduce biases.

Bias in emerging methods

- There’s an emphasis on understanding and addressing potential biases in emerging methods like fraud analytics, risk scoring, and new identity verification technologies. It’s essential to ensure these methods don’t inadvertently introduce or perpetuate bias.

Privacy and equity considerations

- Alongside the technical aspects, the guidelines also highlight the need to address accompanying privacy and equity considerations, emphasizing the importance of fairness and avoiding discriminatory outcomes.

Performance evaluation

- Questions are raised about the sufficiency of current testing programs, especially concerning liveness detection and presentation attack detection. These evaluations are crucial in understanding and mitigating biases that might affect the performance of implementations.

In essence, the NIST SP 800-63-4 guidelines underscore the importance of ensuring that digital identity processes are not only secure and reliable but also fair and free from biases that might disadvantage or harm certain individuals or groups.

Top strategies to reduce bias in AI and ML

The data used to train these systems, the algorithms themselves, and the social contexts in which they are developed and deployed can all contribute to bias. Effectively combating bias requires a multifaceted strategy.

Diverse data collection

Ensure that the training data is representative of the diverse scenarios in which the AI or ML model will operate. Data should adequately represent all relevant groups and conditions.
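One simple way to start checking representativeness is to compare each group's share of the training data with its share in the population the model is meant to serve. The sketch below is only an illustration: the function name `representation_gaps`, the group labels, and the reference shares are all hypothetical, and choosing a sound reference population is itself a judgment call that the code cannot make.

```python
from collections import Counter


def representation_gaps(sample_groups, reference_shares):
    """Return observed share minus reference share for each group.

    sample_groups: one group label per training example (hypothetical labels).
    reference_shares: assumed share of each group in the target population.
    A negative gap means the group is under-represented in the sample.
    """
    total = len(sample_groups)
    if total == 0:
        raise ValueError("sample_groups is empty")
    counts = Counter(sample_groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Made-up example: 8 of 10 training examples come from group_a,
# while the assumed target population is split evenly.
sample = ["group_a"] * 8 + ["group_b"] * 2
gaps = representation_gaps(sample, {"group_a": 0.5, "group_b": 0.5})
for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

Here group_b falls about 30 percentage points short of its assumed population share, which would be a prompt to collect more data or to investigate why that group is missing.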

Bias detection and auditing

Use tools and techniques to detect, quantify, and visualize biases in training data and in model predictions. Regularly audit and monitor AI and ML systems after deployment to ensure they are performing fairly across different groups.
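As a minimal sketch of what such an audit can look like, the example below computes the false positive rate and false negative rate separately for each group from labeled predictions. The function name `error_rates_by_group`, the record layout, and the sample data are assumptions made for illustration; real audits typically use larger samples, confidence intervals, and more than one fairness metric.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute per-group false positive and false negative rates.

    records: iterable of (group, y_true, y_pred) tuples with 0/1 labels.
    Returns {group: {"fpr": ..., "fnr": ...}}; a rate is None when the
    group has no examples of the relevant true class.
    """
    counts = defaultdict(lambda: {"fp": 0, "fn": 0, "neg": 0, "pos": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 1:
            c["pos"] += 1
            if y_pred == 0:
                c["fn"] += 1  # a true match that the model missed
        else:
            c["neg"] += 1
            if y_pred == 1:
                c["fp"] += 1  # a non-match that the model accepted
    return {
        group: {
            "fpr": c["fp"] / c["neg"] if c["neg"] else None,
            "fnr": c["fn"] / c["pos"] if c["pos"] else None,
        }
        for group, c in counts.items()
    }


# Made-up audit data: (group, true label, predicted label)
audit = [
    ("A", 1, 1), ("A", 1, 0), ("A", 0, 0), ("A", 0, 0),
    ("B", 1, 0), ("B", 1, 0), ("B", 0, 1), ("B", 0, 0),
]
rates = error_rates_by_group(audit)
for group, r in sorted(rates.items()):
    print(group, r)
```

A large gap between groups on either rate is a signal to investigate further, not a verdict by itself; which rate matters most depends on the harm each kind of error causes in the deployed system.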

Transparent and explainable AI

Employ models that provide insight into their decision-making processes. Transparency can help developers, users, and affected parties understand AI decisions and can facilitate the identification and correction of biases.

Engage diverse teams

Assemble diverse development teams. A heterogeneous group of researchers and developers can provide multiple perspectives that help in recognizing potential pitfalls and biases that might be overlooked by a homogeneous group.

Feedback loops

Create mechanisms for continuous feedback from users and those affected by AI and ML decisions, ensuring that any biases that emerge in real-world usage are identified and addressed quickly.

Regulatory oversight and guidelines

Stay updated with guidelines and best practices set by professional organizations, governmental bodies, and industry leaders. Some countries and industries are establishing regulatory standards to ensure fairness and transparency in AI.

Socio-technical perspective

Recognize that technical solutions alone are insufficient. Consider societal, ethical, and human factors when designing, deploying, and auditing AI and ML systems.

Ethics and fairness training

Ensure that AI and ML practitioners receive training in ethics and fairness issues to make them aware of the potential pitfalls and challenges of developing responsible AI.

Use fairness-enhancing interventions

Deploy techniques explicitly designed to enhance fairness in AI and ML models. These may include methods like re-sampling, re-weighting, and algorithmic modifications to improve fairness metrics.
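To make re-weighting concrete, here is a sketch of one common scheme, often called reweighing: each training example gets the weight P(group) × P(label) / P(group, label), so that group membership and label look statistically independent in the weighted data. This is one technique among several and is not necessarily the method any particular system uses; the function name `reweighing_weights` and the sample data are hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Return one weight per example: P(group) * P(label) / P(group, label).

    Under-represented (group, label) combinations receive weights above 1,
    over-represented combinations receive weights below 1.
    """
    if len(groups) != len(labels):
        raise ValueError("groups and labels must have the same length")
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    pair_counts = Counter(zip(groups, labels))
    return [
        (group_counts[g] * label_counts[y]) / (n * pair_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


# Made-up data: group A is mostly labeled 1, group B is mostly labeled 0.
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
labels = [1, 1, 1, 0, 1, 0, 0, 0]
weights = reweighing_weights(groups, labels)


def weighted_positive_rate(group):
    """Weighted share of positive labels within one group."""
    total = sum(w for g, w in zip(groups, weights) if g == group)
    positive = sum(
        w for g, y, w in zip(groups, labels, weights) if g == group and y == 1
    )
    return positive / total


print(weighted_positive_rate("A"), weighted_positive_rate("B"))
```

In this toy data the unweighted positive rate is 75% for group A and 25% for group B; after weighting, both groups have the same weighted positive rate. The weights would then be passed to a training procedure that accepts per-example weights, which is outside the scope of this sketch.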

Public engagement and collaboration

Engage the broader public and solicit input from diverse stakeholders. This can provide insights into potential biases and fairness concerns that might not be apparent to developers or industry insiders.

Documentation and reporting

Maintain detailed documentation about the data, training processes, model decisions, and any measures taken to address bias and fairness. This can serve as a reference for future audits and for other teams working on similar problems.

Open source and peer review

If possible, make datasets, models, or algorithms available for peer review. The wider AI community can often provide insights or identify issues that might be missed by the original developers.

Incorporating these suggestions requires a commitment to ethics and impartiality in AI and ML, as well as a sustained effort. As the field continues to advance, the fairness of AI systems must be preserved and strengthened along with it.

The ethical imperative of AI

Like any powerful tool, the impact of AI and ML is shaped not just by their capabilities, but by the values, intentions, and diligence of those who wield them. Bias in AI is more than a technical glitch; it’s a reflection of the complexities of our human society. Each instance of bias that slips into AI and ML systems represents not just an algorithmic oversight but a missed opportunity for creating a more inclusive future.

As we stand at this crucial juncture, the decisions we make will determine the trajectory of AI’s influence on society. It’s tempting to become enamored with the allure of rapid innovation, but it’s even more critical to pause and reflect. Addressing bias isn’t a mere box to tick; it’s a commitment to equitable progress, to a future where AI and ML amplify the best of humanity and conscientiously curb its shortcomings.

Nowhere is this commitment more crucial than in the realm of identity verification and biometrics. As we increasingly inhabit digital landscapes and our online personas begin to hold as much significance as, if not more than, our physical identities, the onus is on us to ensure the equitable treatment of every individual by AI systems. It’s no longer just about precision; it’s about justice, inclusivity, and respect. Each misidentification or misjudgment by an AI system, particularly in biometrics, doesn’t just represent a technical error; it bears the weight of potential real-world ramifications, from lost opportunities to unjust treatment.

Reva Schwartz of NIST encapsulated this sentiment succinctly: to truly build and sustain trust in AI ecosystems, we must be relentless in our pursuit of addressing every conceivable factor that could erode public faith. This endeavor is neither straightforward nor finite. It’s a multifaceted journey, marked by constant learning, recalibration, and collaboration across sectors, disciplines, and geographies.