Profile of a Modern Fraudster

Here's a look at the modern fraudster and how to beat them at their own game.

As 2024 begins, online fraud protection is top-of-mind for businesses and their customers alike, and with good reason: the picture of who commits fraud has changed. We may still imagine a fraudster as a lone individual using technical skills to steal information and money for amusement or to fund personal dreams and vices. Today, however, the fraudster is more likely to be a person or group using ready-made software that was developed by a criminal organization and requires no technical skill to use. Stealing data and profiting from it has become an enormous, global industry.

After hackers crippled the Colonial Pipeline in the U.S. with a ransomware attack and demanded a ransom, and after many other headline-grabbing attacks, it became clear that any business could be victimized. In its most recent U.S. Identity and Fraud Report, Experian found that over 50 percent of businesses have a high level of concern about online fraud.

Kroll interviewed executives around the globe for its 2023 Fraud and Financial Crime Report, and 69 percent of them expect financial crime risks to increase over the next 12 months, driven primarily by cybersecurity threats and data breaches. Even in the EU, which has strong anti-fraud regulations, the European Commission's 2022 Annual Report on the protection of the EU's financial interests (PIF) found that cases of fraud and irregularities increased slightly, at a cost of €1.77 billion.

Identity fraud is rising alongside the cybercrime that supplies much of the stolen information used in fraud attempts. CFO reported that 75 percent of security professionals had seen an uptick in cybercrime attacks over the past year. According to Statista, 72 percent of businesses worldwide were affected by ransomware attacks in 2023. Cybersecurity Ventures predicts the global annual cost of cybercrime will reach $9.5 trillion this year.

But how do these annual costs translate into damage for individual businesses and people? Depending on financial circumstances, the cost of a cybercrime scam or identity theft can be the difference between a business staying open and closing its doors, or between a family meeting its monthly bills and falling dangerously short.

In its Cost of a Data Breach 2023 report, IBM found that the global average cost of a data breach was $4.45 million, an increase of 15 percent over three years. According to the Identity Theft Resource Center, of small businesses that fell victim to cybercrime, 47 percent lost under $250,000, 26 percent lost between $250,000 and $500,000, and 13 percent lost over $500,000. The National Council on Identity Theft Protection reported that the median cost of an identity theft incident for an individual is $500.

Whether we run a business or simply shop, bank, and interact online as individuals, we constantly need protection from a new, professional class of fraudsters.

Running Fraud as a Business

Today, much cybercrime and fraud is committed by large ransomware organizations that run scams with their own “staff” and also sell “cybercrime as a service” (CaaS) solutions to affiliate groups and individuals. Thanks to these services, a person working alone no longer needs sophisticated technical skills or self-developed software to steal credentials and data and use them to commit profitable fraud.

Europol recently published an infographic showing the business structure of a ransomware group with four tiers: an affiliate program, a core group, a second tier of employees, and a tier of offered services. Employee titles include:

- Senior Managers

- Back-end Developers

- Reverse Engineers

- Human Resources Associates

- Recruiters

- Paralegals

- Ransom Negotiators

Among the listings in the services tier are money laundering; initial access brokers, who infiltrate systems through software bugs and stolen credentials and sell that access to other criminals; and malware dropper and botnet services.

One such ransomware group is LockBit, which claims Boeing, Royal Mail, and the Industrial and Commercial Bank of China among the 2023 victims of its software. LockBit was the subject of a June 2023 joint Cybersecurity Advisory issued by government cybersecurity agencies in the U.S., Canada, Australia, New Zealand, Germany, France, and the UK.

The advisory noted that in 2022, “LockBit was the most active global ransomware group and ransomware as a service (RaaS) provider in terms of the number of victims claimed on their data leak site” and that it continued to be prolific in 2023.

The advisory cited several tactics the group uses to attract affiliates: letting affiliates receive ransom payments before sending a cut to the core group; disparaging other RaaS groups in online forums; publicity stunts such as paying people to get LockBit tattoos and putting a $1 million bounty on information related to the real-world identity of LockBit's lead; and providing ransomware with a simple point-and-click interface that people with a lower degree of technical skill can use.

LockBit may be the most active ransomware group built on a business infrastructure, but it's not alone. Many other groups are active, and new ones keep emerging, putting a wealth of ready-made solutions and services in the hands of fraudsters.

Taking Advantage of the Latest Technology

Beyond the commercialization of ransomware lowering the barrier to entry, modern fraudsters also benefit from advances in artificial intelligence (AI) and machine learning (ML) that make crimes easier to commit. It's important to be aware of these advances and understand the fraud risks they introduce.

For example, the same generative AI capabilities that marketing teams use to create content and email campaigns for their customers are being used by scammers to perfect their phishing emails. These emails typically pose as messages from the recipient's bank, tax authority, IT department, HR, or boss and ask them to reconfirm personal data or login credentials. In the past, they often contained errors or strange language that tipped recipients off that they weren't genuine.

With generative AI, these emails are personalized, error-free, and harder to identify as illegitimate – and they can be created in just a few minutes. In a recent survey of senior cybersecurity stakeholders by Abnormal Security, over 80 percent of respondents confirmed that their organizations have either already received AI-generated email attacks or strongly suspect this is the case.

Automation extends fraudsters' reach by performing tasks without human intervention. Whether a fraudster rents a botnet from a CaaS group or builds a bot on their own, automation lets them attack more people and businesses while their own identity stays hidden.

Take, for example, credential stuffing attacks, in which usernames and passwords exposed in a data breach, stolen from individuals, or purchased on the dark web are tried on thousands of websites in the hope of gaining access to additional accounts. It would take a person a long time to enter credentials manually on each site, but a fraud bot can submit many login attempts per second, making it possible to try longer lists of credentials against more websites in a single attack.
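On the defensive side, the speed that makes bots effective is also what gives them away. The Python sketch below shows one common heuristic, assumed here for illustration rather than taken from any particular product: flag a source IP that produces failed logins for many distinct usernames within a short sliding window. The class name `StuffingDetector` and its default thresholds are hypothetical.

```python
from collections import defaultdict, deque


class StuffingDetector:
    """Hypothetical sketch: flag a source that fails logins for many distinct usernames quickly.

    window_seconds and max_distinct_users are illustrative assumptions, not tuned values.
    """

    def __init__(self, window_seconds: float = 60.0, max_distinct_users: int = 20) -> None:
        self.window = window_seconds
        self.max_users = max_distinct_users
        # source IP -> deque of (timestamp, username) for recent failed logins
        self.failures = defaultdict(deque)

    def record_failure(self, source_ip: str, username: str, ts: float) -> bool:
        """Record one failed login; return True if this source now looks like a stuffing bot."""
        events = self.failures[source_ip]
        events.append((ts, username))
        # Drop events that have fallen out of the sliding window
        while events and ts - events[0][0] > self.window:
            events.popleft()
        # A person retries one account; a stuffing bot cycles through many different ones
        distinct_users = {user for _, user in events}
        return len(distinct_users) > self.max_users


if __name__ == "__main__":
    detector = StuffingDetector(window_seconds=60, max_distinct_users=20)
    # Simulated bot: 25 different leaked accounts tried from one IP, 0.2 s apart
    flags = [detector.record_failure("203.0.113.7", f"user{i}", ts=i * 0.2) for i in range(25)]
    print(flags.index(True))  # → 20 (the 21st distinct account trips the check)
```

A person mistyping their own password a few times never trips this check, while the simulated bot is flagged within seconds. Because bots often rotate through many IP addresses via botnets, a per-IP counter like this is only one signal and would normally be combined with others.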

Deepfake technology, which enables fraudsters to impersonate another person’s visual likeness and voice, isn’t new. But AI has made it easier to create deepfakes and harder to detect them. Deepfakes have made headlines in the past year as concerns over their use for disinformation have increased. You may have seen examples of deepfakes featuring celebrities, such as Taylor Swift’s voice and image inviting people to a supposed Le Creuset giveaway, the “This is not Morgan Freeman” video that went viral early last year, and the “Heart on My Sleeve” collaboration between the Weeknd and Drake in which neither actually participated. These fakes can be very convincing.

Criminals know this and are using deepfakes to commit identity fraud like never before. They can more readily create synthetic identities for people who don't actually exist by pairing deepfake facial images with AI-generated background information and identity documents, and then use those identities to open new accounts and scam banks and other businesses. They can also use deepfaked factors, such as synthetic facial scans, to try to circumvent the biometric authentication systems that protect legitimate users' accounts.

They can even try to trick voice recognition systems with audio deepfakes built from podcasts, webinars, social media videos, or phishing calls. (A three-second clip of someone speaking is all that’s needed for the latest pre-trained algorithms to recreate a person’s voice, according to University College London.)

Combatting Modern Fraudsters with AI-Powered Biometrics

Fighting the AI-powered crimes committed by modern fraudsters takes AI-powered fraud detection. Biometric verification generally provides better security than passwords when users (whether employees or customers) access data, systems, accounts, and networks. When it integrates AI technology, biometric verification offers even stronger protection against fraudsters, whether they're trying to spoof employee and customer credentials or open fake accounts with identities they've created.

AI improves liveness detection, which distinguishes facial scans of real people presenting themselves for access from AI-generated images and from images taken from photos or videos.
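Liveness matters because a face match alone can be fooled: a deepfake or replayed video of the real account holder may match the enrolled face very closely. A minimal sketch of the gating logic, assuming a verification system that produces two scores between 0 and 1 (a face-match score and a liveness score), might look like this. The score scale, thresholds, and names are hypothetical; real systems calibrate thresholds against measured error rates.

```python
from dataclasses import dataclass

# Hypothetical thresholds for illustration only; production values are tuned per deployment
MATCH_THRESHOLD = 0.80
LIVENESS_THRESHOLD = 0.90


@dataclass
class FaceCheck:
    match_score: float     # assumed 0..1 similarity between the selfie and the enrolled or ID photo
    liveness_score: float  # assumed 0..1 confidence that the capture shows a live person


def decide(check: FaceCheck) -> str:
    """Accept only if the capture is judged live AND matches the enrolled face."""
    # Check liveness first: a deepfake or replayed video can score highly on match alone
    if check.liveness_score < LIVENESS_THRESHOLD:
        return "reject: possible presentation attack"
    if check.match_score < MATCH_THRESHOLD:
        return "reject: face does not match"
    return "accept"


if __name__ == "__main__":
    # Replayed video of the real user: strong match, weak liveness
    print(decide(FaceCheck(match_score=0.97, liveness_score=0.30)))  # → reject: possible presentation attack
    # Genuine, live user
    print(decide(FaceCheck(match_score=0.95, liveness_score=0.98)))  # → accept
```

Ordering the checks this way means a near-perfect match from a spoofed capture is still rejected, which is exactly the gap liveness detection is meant to close.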

During document verification, AI also improves the ability to tell AI-generated documents from genuine ID documents when a fraudster attempts to open an account with a synthetic identity. These technologies also enable adaptive synthetic voice protection, which detects cues indicating that a voice was digitally generated.

ML enables these biometric solutions to continually improve, learning from each interaction and adapting as fraudsters try increasingly sophisticated attacks.

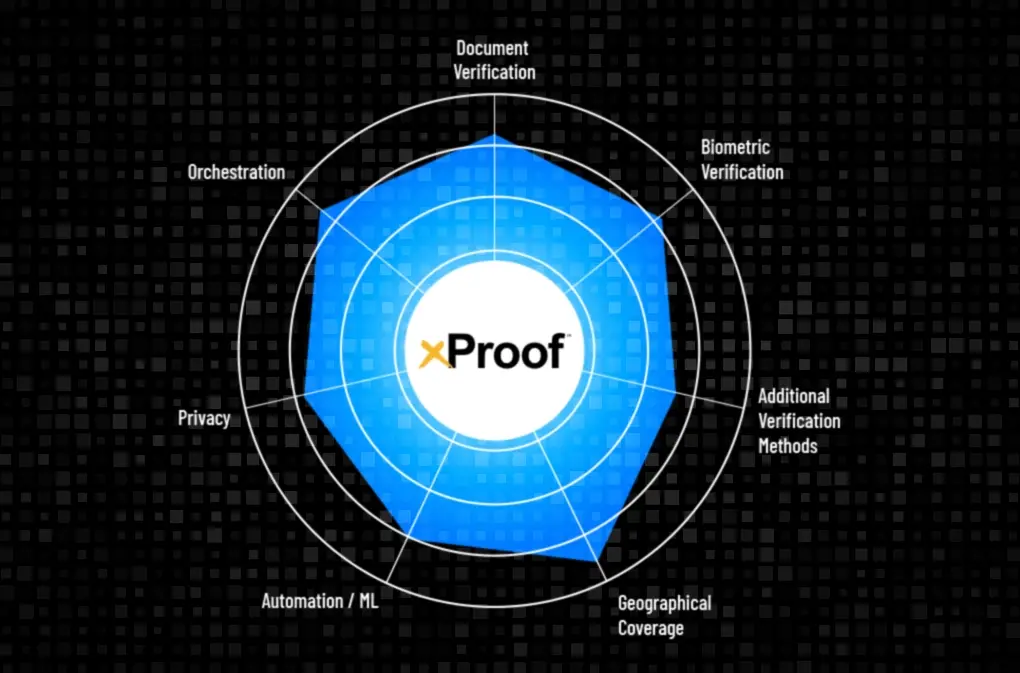

Daon brings patented AI and ML algorithms and technologies to the biometric authentication and proofing that protects some of the world’s most iconic brands – and their customers. See how our fraud protection products can future-proof your business against modern fraudsters.